CNPG(CloudNative PG)란?

PostgreSQL Operator로 Operator Hub에서도 최고 레벨 5 AutoPilot 엄청나게 단계에 있는 완성도 있는 오픈소스이다

이름에서도 알 수 있듯이 Cloud Natvie 하게 동작하며 아래와 같은 기능들을 제공하며 운영에 필요한 대부분의 것들을 자동화하였음

Main features

- Direct integration with Kubernetes API server for High Availability, without requiring an external tool

- Self-Healing capability, through:

- Planned switchover of the primary instance by promoting a selected replica

- Scale up/down capabilities

- Definition of an arbitrary number of instances (minimum 1 - one primary server)

- Definition of the read-write service, to connect your applications to the only primary server of the cluster

- Definition of the read-only service, to connect your applications to any of the instances for reading workloads

- Declarative management of PostgreSQL configuration, including certain popular Postgres extensions through the cluster spec: pgaudit, auto_explain, pg_stat_statements, and pg_failover_slots

- Declarative management of Postgres roles, users and groups

- Support for Local Persistent Volumes with PVC templates

- Reuse of Persistent Volumes storage in Pods

- Separate volume for WAL files

- Rolling updates for PostgreSQL minor versions

- In-place or rolling updates for operator upgrades

- TLS connections and client certificate authentication

- Support for custom TLS certificates (including integration with cert-manager)

- Continuous WAL archiving to an object store (AWS S3 and S3-compatible, Azure Blob Storage, and Google Cloud Storage)

- Backups on volume snapshots (where supported by the underlying storage classes)

- Backups on object stores (AWS S3 and S3-compatible, Azure Blob Storage, and Google Cloud Storage)

- Full recovery and Point-In-Time recovery from an existing backup on volume snapshots or object stores

- Offline import of existing PostgreSQL databases, including major upgrades of PostgreSQL

- Fencing of an entire PostgreSQL cluster, or a subset of the instances in a declarative way

- Hibernation of a PostgreSQL cluster in a declarative way

- Support for Synchronous Replicas

- Support for HA physical replication slots at cluster level

- Backup from a standby

- Backup retention policies (based on recovery window, only on object stores)

- Parallel WAL archiving and restore to allow the database to keep up with WAL generation on high write systems

- Support tagging backup files uploaded to an object store to enable optional retention management at the object store layer Replica clusters for

- PostgreSQL deployments across multiple Kubernetes clusters, enabling private, public, hybrid, and multi-cloud architectures

- Connection pooling with PgBouncer

- Support for node affinity via nodeSelector

- Native customizable exporter of user defined metrics for Prometheus through the metrics port (9187)

- Standard output logging of PostgreSQL error messages in JSON format

- Automatically set readOnlyRootFilesystem security context for pods

- cnpg plugin for kubectl

- Simple bind and search+bind LDAP client authentication

- Multi-arch format container images

- OLM installation

CloudNativePG 구성

CloudNativePG 인스턴스 역할

아래 3가지 방식의 인스턴스의 역할이 존재

- rw: 읽기/쓰기를 지원하는 primary DB인스턴스

- ro: 읽기를 지원 standby DB인스턴스

- r: 읽기를 지원 any(Primary, Standby 모두를 포함) DB인스턴스

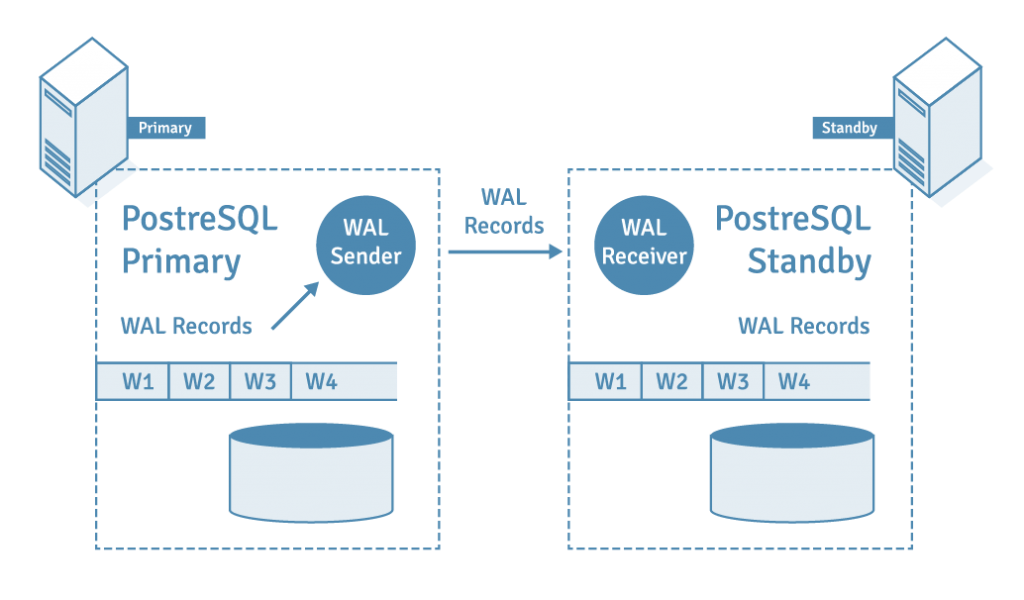

CloudNativePG 복제 구성

WAL 방식을 통한 데이터베이스 복제를 하고, 동기/비동기 복제 방식을 제공한다.

WAL(Write Ahead Logging)이란

WSL은 데이터의 모든 변경사항을 로그파일에 기록하여 데이터의 일관성을 유지한다.

만약 특정 작업을 수행하다가 예기치 못한 장애가 발생한다고 하여도, 로그 파일을 기반으로 이전에 커밋되지 않는 트랜잭션을 재수 행하여 데이터의 무결성을 유지한다.

Postgresql 뿐만이 아니라 , sqlite, mongodb, hbase 등의 데이터베이스에서 중요하게 사용되는 기술이다.

CloudNativePG 설치

OLM(Operator Lifecycle Manger)은 Operator 설치를 용이하게 해 주며 상태 관리 등 을 도와주는 도구이다.

# OLM(Operator Lifecycle Manager) 설치

curl -sL https://github.com/operator-framework/operator-lifecycle-manager/releases/download/v0.25.0/install.sh | bash -s v0.25.0

# OLM 리소스 확인

kubectl get ns

kubectl get all -n olm

kubectl get-all -n olm

kubectl get all -n operators

kubectl get-all -n operators | grep -v packagemanifest

# CloudNativePG 설치

curl -s -O https://operatorhub.io/install/cloudnative-pg.yaml

cat cloudnative-pg.yaml

apiVersion: operators.coreos.com/v1alpha1 ││

kind: Subscription ││

metadata: ││

name: my-cloudnative-pg ││

namespace: operators ││

spec: ││

channel: stable-v1 ││

name: cloudnative-pg ││

source: operatorhubio-catalog ││

sourceNamespace: olmroot@platform-k8s-master-2:~/spjang/postgres#

kubectl create -f cloudnative-pg.yaml

# CloudNativePG 리소스 확인

kubectl get all -n operators

kubectl get-all -n operators | grep -v packagemanifest

kubectl get crd | grep cnpg

backups.postgresql.cnpg.io 2023-10-29T02:47:55Z

clusters.postgresql.cnpg.io 2023-10-29T02:47:56Z

poolers.postgresql.cnpg.io 2023-10-29T02:47:56Z

scheduledbackups.postgresql.cnpg.io 2023-10-29T02:47:56Z

platform-k8s-master-2:~/spjang/postgres# kubectl get clusterserviceversions -n operators

NAME DISPLAY VERSION REPLACES PHASE ││

cloudnative-pg.v1.21.0 CloudNativePG 1.21.0 Succeeded

# CloudNativePG Cluster 설치

# 3대 파드 구성(Priamry 1, Standby 2),config parameters 설정, pg_hba 설정, bootstrap 설정, 파드 모니터 설정

cat <<EOT> mycluster1.yaml

# Example of PostgreSQL cluster

apiVersion: postgresql.cnpg.io/v1

kind: Cluster

metadata:

name: mycluster

spec:

imageName: ghcr.io/cloudnative-pg/postgresql:15.3

instances: 3

storage:

size: 3Gi

postgresql:

parameters:

max_worker_processes: "40"

timezone: "Asia/Seoul"

pg_hba:

- host all postgres all trust

primaryUpdateStrategy: unsupervised

enableSuperuserAccess: true

bootstrap:

initdb:

database: app

encoding: UTF8

localeCType: C

localeCollate: C

owner: app

monitoring:

enablePodMonitor: true

EOT

# 설치되는 파드 순서 확인 : job.batch(initdb -> join)

kubectl apply -f mycluster1.yaml && kubectl get pod -w

# 확인

kubectl get pod,pvc,pv,svc,ep

kubectl df-pv

kubectl describe pod -l cnpg.io/cluster=mycluster # TCP 9187 메트릭 제공

kubectl get pod -l cnpg.io/cluster=mycluster -owide

# 클러스터 확인

# get-all은 모든 리소스를 보여주는 krew 플러그인

kubectl get-all --since 7m

kubectl get cluster

NAME AGE INSTANCES READY STATUS PRIMARY

mycluster 3d9h 3 3 Cluster in healthy state mycluster-1

# 자세한 클러스터의 상태 정보를 보여주는 cnpg krew 플러그인도 지원

kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: cnpg

System ID: 7297627656490033175

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-1

Primary start time: 2023-11-04 15:24:03 +0000 UTC (uptime 4m51s)

Status: Cluster in healthy state

Instances: 3

Ready instances: 3

Current Write LSN: 0/6000060 (Timeline: 1 - WAL File: 000000010000000000000006)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-02 15:17:15 +0000 UTC 89.99

mycluster-replication 2024-02-02 15:17:15 +0000 UTC 89.99

mycluster-server 2024-02-02 15:17:15 +0000 UTC 89.99

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-2 0/6000060 0/6000060 0/6000060 0/6000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

mycluster-3 0/6000060 0/6000060 0/6000060 0/6000060 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-1 29 MB 0/6000060 Primary OK BestEffort 1.21.0 platform-k8s-worker-3

mycluster-2 29 MB 0/6000060 Standby (async) OK BestEffort 1.21.0 platform-k8s-worker-4

mycluster-3 29 MB 0/6000060 Standby (async) OK BestEffort 1.21.0 platform-k8s-worker-2

CloudNative PG는 Statefulset이 존재하지 않는다?

kubectl get pod,sts,deployment -n cnpg

kubectl get pod,sts,deployment -n cnpg

NAME READY STATUS RESTARTS AGE

pod/mycluster-1 1/1 Running 0 4m2s

pod/mycluster-2 1/1 Running 0 2m40s

pod/mycluster-3 1/1 Running 0 70s

CNPG의 리소스를 살펴보면 데이터베이스가 일반적으로 배포되는 방식인 스테이트풀셋이 pod로만 구성이 되어있는 것을 볼 수 있다.

스테이트 풀셋은 다음과 같은 제약사항들이 존재한다.

- 스테이트풀셋 당 하나의 파드 템플릿만을 사용

- PVC 크기 조정 불가

- 배포 전략 고정

즉, CNPG는 제약사항에서 벗어나 스테이트풀셋의 의존하지 않고 Postgres 인스턴스를 유연하게 관리하기 위해서 Custom Pod Controller라는 것을 이용한다.

아래와 같은 다양한 기능들을 제공한다. ( 자세하게 알고 싶다면 "Custom Pod Controller를 참고 )

- PVC 리사이징( PVC resizing)

- 인스턴스 복제(Primary Instances versus Replicas)

- PVC 일관성 유지(coherence of PVCs)

데이터 지속성 관리를 위해 스테이트풀셋을 사용하지 않고, Custom Pod Controller라는 것을 사용하여 PVC를 직접 관리한다.

CloudNtaivePG 기본 사용

postsql접속을 위해 client 파드를 생성해 보자

# 계정정보 secret 확인

kubectl get secret -l cnpg.io/cluster=mycluster

NAME TYPE DATA AGE

mycluster-app kubernetes.io/basic-auth 9 9m14s

mycluster-superuser kubernetes.io/basic-auth 9 9m14s

# superuser 계정명

kubectl get secrets mycluster-superuser -o jsonpath={.data.username} | base64 -d ;echo

postgres

# superuser 계정 암호

kubectl get secrets mycluster-superuser -o jsonpath={.data.password} | base64 -d ;echo

UUTWc0Apwp8i0BbXd06ja6B4LURuFPAsuSlV6XKzoebMqEksQdANdNF0cPlQxio0

# app 계정명

kubectl get secrets mycluster-app -o jsonpath={.data.username} | base64 -d ;echo

app

# app 계정 암호

kubectl get secrets mycluster-app -o jsonpath={.data.password} | base64 -d ;echo

Nt9J8MogPgzwMj9pqVI9xg0TqAvyRgTsunxOTk5icI4yXWCUiW9T8WHBgh1ZtIJZ

# app 계정 암호 변수 지정

AUSERPW=$(kubectl get secrets mycluster-app -o jsonpath={.data.password} | base64 -d)

# PG 클라이언트 파드 3대 배포: envsubst 활용

## PODNAME=myclient1 VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f -

curl -s https://raw.githubusercontent.com/gasida/DOIK/main/5/myclient-new.yaml -o myclient.yaml

for ((i=1; i<=3; i++)); do PODNAME=myclient$i VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f - ; done

# [myclient1] superuser 계정으로 mycluster-rw 서비스 접속

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 --variable=HISTFILE=/tmp/.psql_history

postgres=# \conninfo

You are connected to database "postgres" as user "postgres" on host "mycluster-rw" (address "10.233.16.62") at port "5432".

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, compression: off)

# 데이터베이스 조회

postgres=# \l

List of databases

Name | Owner | Encoding | Collate | Ctype | Access privileges

-----------+----------+----------+---------+-------+-----------------------

app | app | UTF8 | C | C |

postgres | postgres | UTF8 | C | C |

template0 | postgres | UTF8 | C | C | =c/postgres +

| | | | | postgres=CTc/postgres

template1 | postgres | UTF8 | C | C | =c/postgres +

| | | | | postgres=CTc/postgres

(4 rows)

# 타임존 확인

postgres=# SELECT * FROM pg_timezone_names WHERE name = current_setting('TIMEZONE');

name | abbrev | utc_offset | is_dst

------------+--------+------------+--------

Asia/Seoul | KST | 09:00:00 | f

(1 row)

# 빠져나오기

postgres=# \q

# [myclient1] superuser 계정으로 mycluster-rw 서비스 접속하여 데이터베이스 리스트 조회

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -

# [myclient1] app 계정으로 mycluster-rw 서비스 접속하여 app 데이터베이스 이동 >> app 계정 암호 직접 입력

kubectl exec -it myclient1 -- psql -U app -h mycluster-rw -p 5432 -d app -W --variable=HISTFILE=/tmp/.psql_history

Nt9J8MogPgzwMj9pqVI9xg0TqAvyRgTsunxOTk5icI4yXWCUiW9T8WHBgh1ZtIJZ

-------------

app=> \conninfo

You are connected to database "app" as user "app" on host "mycluster-rw" (address "10.233.16.62") at port "5432".

SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, compression: off)

app=> \l

app=> \dt

app=> \q

CloudNative PG 복제

테스트를 위한 DVD Rental 샘플 데이터베이스 생성

# 다운로드

curl -LO https://www.postgresqltutorial.com/wp-content/uploads/2019/05/dvdrental.zip

unzip dvdrental.zip

# myclient1 파드에 dvdrental.tar 복사

kubectl cp dvdrental.tar myclient1:/tmp

# [myclient1] superuser 계정으로 mycluster-rw 서비스 접속 후 데이터베이스 생성

kubectl exec -it myclient1 -- createdb -U postgres -h mycluster-rw -p 5432 dvdrental

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -l

# DVD Rental Sample Database 불러오기

kubectl exec -it myclient1 -- pg_restore -U postgres -d dvdrental /tmp/dvdrental.tar -h mycluster-rw -p 5432

# DVD Rental Sample Database 에서 actor 테이블 조회

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -d dvdrental -c "SELECT * FROM actor"

각 파드에 접근해서 DVD Rental 샘플 데이터 복제 동기화 확인

# 각각 mycluster-ro 와 mycluster-r(mycluster-any) 서비스에 접속해보자

kubectl get svc,ep,endpointslices -l cnpg.io/cluster=mycluster

# 파드IP 변수 지정

POD1=$(kubectl get pod mycluster-1 -o jsonpath={.status.podIP})

POD2=$(kubectl get pod mycluster-2 -o jsonpath={.status.podIP})

POD3=$(kubectl get pod mycluster-3 -o jsonpath={.status.podIP})

# 파드별 actor 테이블 카운트 조회

kubectl exec -it myclient1 -- psql -U postgres -h $POD1 -p 5432 -d dvdrental -c "SELECT COUNT(*) FROM actor"

kubectl exec -it myclient1 -- psql -U postgres -h $POD2 -p 5432 -d dvdrental -c "SELECT COUNT(*) FROM actor"

kubectl exec -it myclient1 -- psql -U postgres -h $POD3 -p 5432 -d dvdrental -c "SELECT COUNT(*) FROM actor"

# (참고) 각 서비스 접근 조회

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d dvdrental -c "SELECT * FROM actor"

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -d dvdrental -c "SELECT * FROM actor"

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-r -p 5432 -d dvdrental -c "SELECT * FROM actor"

rw vs ro vs r 차이 확인

# rw

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -c "select inet_server_addr();"

for i in {1..30}; do kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -c "select inet_server_addr();"; done | sort | uniq -c | sort -nr | grep 192

# ro

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -c "select inet_server_addr();"

for i in {1..30}; do kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -c "select inet_server_addr();"; done | sort | uniq -c | sort -nr | grep 192

# r

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-r -p 5432 -c "select inet_server_addr();"

for i in {1..30}; do kubectl exec -it myclient1 -- psql -U postgres -h mycluster-r -p 5432 -c "select inet_server_addr();"; done | sort | uniq -c | sort -nr | grep 192

CloudNativePG 장애 테스트

장애 테스트를 위한 준비

장애 테스트를 위한 샘플 데이터를 INSERT 한다.

# 파드IP 변수 지정

POD1=$(kubectl get pod mycluster-1 -o jsonpath={.status.podIP})

POD2=$(kubectl get pod mycluster-2 -o jsonpath={.status.podIP})

POD3=$(kubectl get pod mycluster-3 -o jsonpath={.status.podIP})

# query.sql

curl -s -O https://raw.githubusercontent.com/gasida/DOIK/main/5/query.sql

cat query.sql ;echo

CREATE DATABASE test;

\c test;

CREATE TABLE t1 (c1 INT PRIMARY KEY, c2 TEXT NOT NULL);

INSERT INTO t1 VALUES (1, 'Luis');

# SQL 파일 query 실행

kubectl cp query.sql myclient1:/tmp

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -f /tmp/query.sql

# [터미널2] 모니터링

while true; do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"; date;sleep 1; done

# 확인

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT * FROM t1"

# INSERT

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES (2, 'Luis2');"

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT * FROM t1"

# test 데이터베이스에 97개의 데이터 INSERT

#for ((i=3; i<=100; i++)); do psql -U postgres -h $POD1 -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

for ((i=3; i<=100; i++)); do kubectl exec -it myclient1 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

kubectl exec -it myclient1 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"

count

-------

100

(1 row)Case 1: DB 인스턴스(pod) 삭제 및 동작 확인

대량의 데이터를 Insert 하는 도중에 Primary DB인스턴스인 mycluster-1 파드를 삭제해 보고 결과를 확인해 보자.

# 프라이머리 파드 정보 확인

kubectl cnpg status mycluster

# [터미널1] 모니터링

watch kubectl get pod -l cnpg.io/cluster=mycluster

# [터미널2] 모니터링

while true; do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"; date;sleep 1; done

# [터미널3] test 데이터베이스에 다량의 데이터 INSERT

for ((i=301; i<=10000; i++)); do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

for ((i=10001; i<=20000; i++)); do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

# [터미널4] 파드 삭제 >> INSERT 가 중간에 끊어지는지 확인

kubectl get pod -l cnpg.io/cluster=mycluster -owide

kubectl delete pvc/mycluster-1 pod/mycluster-1

# Primary 인스턴스 확인

kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: cnpg

System ID: 7297627656490033175

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-2

Primary start time: 2023-11-04 16:26:52 +0000 UTC (uptime 3h46m4s)

...

# 파드 정보 확인 : 파드의 이름과 배치 위치 비교 확인

kubectl get pod -l cnpg.io/cluster=mycluster -owide

kubectl get pod -l cnpg.io/cluster=mycluster

NAME READY STATUS RESTARTS AGE

mycluster-2 1/1 Running 0 125m

mycluster-3 1/1 Running 0 125m

mycluster-4 1/1 Running 0 2m18s

파드 삭제를 하여도 자동으로 FailOver 되면서 INSERT문이 끊어지지 않고 다시 정상화되는 것을 볼 수 있다.

그리고 mycluster-4라는 DB인스턴스가 새로 생기고, Primary파드 또한 mycluster-2로 변경되었다.

Case 2: 노드 drain 및 동작 확인

DB 인스턴스 파드가 동작하고 있는 노드를 drain 하고 결과를 확인한다.

# (옵션) 오퍼레이터 로그 확인

kubectl get pod -n operators -l app.kubernetes.io/name=cloudnative-pg

kubectl logs -n operators -l app.kubernetes.io/name=cloudnative-pg -f

# 워커노드 drain

# kubectl drain <<노드>> --ignore-daemonsets --delete-emptydir-data

kubectl get node

NODE=<각자 자신의 EC2 노드 이름 지정>

NODE=ip-192-168-3-231.ap-northeast-2.compute.internal

kubectl drain $NODE --delete-emptydir-data --force --ignore-daemonsets && kubectl get node -w

# 클러스터 정보 확인 : 파드가 Pending 된 주 원인은 무엇일까요? >> 예를 들어 동일AZ에 정상 워커노드가 있었다면 어떻게 될까요?

kubectl get pod -owide

kubectl cnpg status mycluster

# 동작 확인 후 uncordon 설정

kubectl uncordon $NODE

root@platform-k8s-master-2:~/spjang/postgres# kubectl cnpg status

mycluster

Cluster Summary

Name: mycluster

Namespace: cnpg

System ID: 7297627656490033175

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-3

root@platform-k8s-master-2:~/spjang/postgres# kubectl cnpg status mycluster

Cluster Summary

Name: mycluster

Namespace: cnpg

System ID: 7297627656490033175

PostgreSQL Image: ghcr.io/cloudnative-pg/postgresql:15.3

Primary instance: mycluster-3

Primary start time: 2023-11-04 20:27:18 +0000 UTC (uptime 6m3s)

Status: Waiting for the instances to become active Some instances are not yet active. Please wait.

Instances: 3

Ready instances: 2

Current Write LSN: 0/1A000110 (Timeline: 3 - WAL File: 00000003000000000000001A)

Certificates Status

Certificate Name Expiration Date Days Left Until Expiration

---------------- --------------- --------------------------

mycluster-ca 2024-02-02 15:17:15 +0000 UTC 89.78

mycluster-replication 2024-02-02 15:17:15 +0000 UTC 89.78

mycluster-server 2024-02-02 15:17:15 +0000 UTC 89.78

Continuous Backup status

Not configured

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-2 0/1A000110 0/1A000110 0/1A000110 0/1A000110 00:00:00 00:00:00 00:00:00 streaming async 0 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-3 51 MB 0/1A000110 Primary OK BestEffort 1.21.0 platform-k8s-worker-2

mycluster-2 51 MB 0/1A000110 Standby (async) OK BestEffort 1.21.0 platform-k8s-worker-2

mycluster-4 - - - pod not available BestEffort - platform-k8s-worker-4

Primary 인스턴스 파드인 mycluster-2가 동작중인 노드를 drain 하였는데 mycluster-3으로 primary가 잘 변경됨(SwitchOver)되어 을 볼 수 있다.

CloudNativePG 스케일 & 롤링 업데이트

스케일링 테스트

Instance라는 커스텀 리소스를 통해 손쉽게 스케일 할 수 있다.

# 정보 확인

kubectl cnpg status mycluster

kubectl get cluster mycluster

NAME AGE INSTANCES READY STATUS PRIMARY

mycluster 5h17m 3 3 Cluster in healthy state mycluster-3

# 모니터링

watch kubectl get pod

# 5대로 증가 : 증가 및 join 완료 후 아래 접속 확인

kubectl patch cluster mycluster --type=merge -p '{"spec":{"instances":5}}' && kubectl get pod -l postgresql=mycluster -w

kubectl cnpg status mycluster

kubectl get cluster mycluster

mycluster 46m 5 5 Cluster in healthy state mycluster-1

# any 접속 확인

for i in {1..30}; do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-r -p 5432 -c "select inet_server_addr();"; done | sort | uniq -c | sort -nr

30 inet_server_addr

30 (1 row)

30

30 ------------------

8 10.233.202.239

8 10.233.202.222

7 10.233.71.90

5 10.233.133.178

2 10.233.71.89

# 3대로 감소

kubectl patch cluster mycluster --type=merge -p '{"spec":{"instances":3}}' && kubectl get pod -l postgresql=mycluster -w

k get cluster mycluster

NAME AGE INSTANCES READY STATUS PRIMARY

mycluster 48m 3 3 Cluster in healthy state mycluster-1롤링업데이트

# [터미널1] 모니터링

watch kubectl get pod -l cnpg.io/cluster=mycluster

# [터미널2] 모니터링

while true; do kubectl exec -it myclient2 -- psql -U postgres -h mycluster-ro -p 5432 -d test -c "SELECT COUNT(*) FROM t1"; date;sleep 1; done

# [터미널3] test 데이터베이스에 다량의 데이터 INSERT

for ((i=10000; i<=20000; i++)); do kubectl exec -it myclient3 -- psql -U postgres -h mycluster-rw -p 5432 -d test -c "INSERT INTO t1 VALUES ($i, 'Luis$i');";echo; done

# [터미널4] postgresql:15.3 → postgresql:15.4 로 업데이트 >> 순서와 절차 확인

kubectl cnpg status mycluster # Primary 파드와 Image 버전 확인

kubectl patch cluster mycluster --type=merge -p '{"spec":{"imageName":"ghcr.io/cloudnative-pg/postgresql:15.4"}}' && kubectl get pod -l postgresql=mycluster -w

# 확인

kubectl get cluster mycluster

kubectl cnpg status mycluster | grep Image

롤링 업데이트르 진행하면서 postgresql:15.4로 이미지가 업데이트가 되고, 다량의 데이터를 INSERT 하는 도중에도 자동으로 복구(FailOver)가 되는 것을 볼 수 있다.

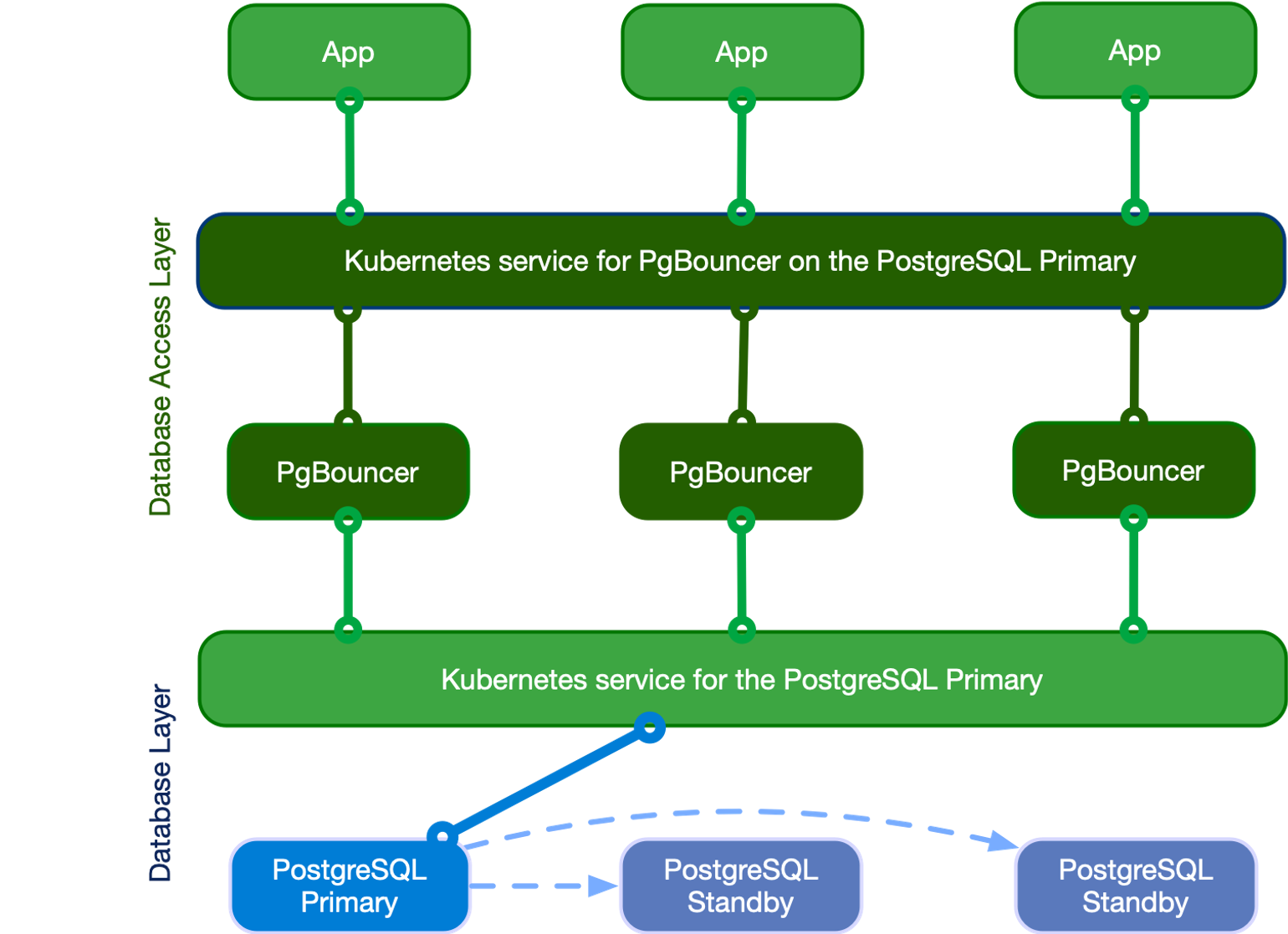

Pgbouncer

Connection Pooling을 지원하는 오픈소스이다

인증 모니터링/로깅 처리기능, 연결 재사용, 데이터 베이스 액세스 기능들을 제공한다.

설치 및 동작 확인

Pooler라는 리소스를 통해 구성됨

rw타입을 지정하였기 때문에, primary 인스턴스 파드로만 접근하는 것을 확인할 수 있다.

# 클러스터 신규 설치 : 동기 복제

cat <<EOT> mycluster2.yaml

# Example of PostgreSQL cluster

apiVersion: postgresql.cnpg.io/v1

kind: Cluster

metadata:

name: mycluster

spec:

imageName: ghcr.io/cloudnative-pg/postgresql:16.0

instances: 3

storage:

size: 3Gi

postgresql:

pg_hba:

- host all postgres all trust

enableSuperuserAccess: true

minSyncReplicas: 1

maxSyncReplicas: 2

monitoring:

enablePodMonitor: true

EOT

kubectl apply -f mycluster2.yaml && kubectl get pod -w

# 동기 복제 정보 확인

watch kubectl cnpg status mycluster

kubectl cnpg status mycluster

Streaming Replication status

Replication Slots Enabled

Name Sent LSN Write LSN Flush LSN Replay LSN Write Lag Flush Lag Replay Lag State Sync State Sync Priority Replication Slot

---- -------- --------- --------- ---------- --------- --------- ---------- ----- ---------- ------------- ----------------

mycluster-2 0/604DF70 0/604DF70 0/604DF70 0/604DF70 00:00:00 00:00:00 00:00:00 streaming quorum 1 active

mycluster-3 0/604DF70 0/604DF70 0/604DF70 0/604DF70 00:00:00 00:00:00 00:00:00 streaming quorum 1 active

Unmanaged Replication Slot Status

No unmanaged replication slots found

Instances status

Name Database Size Current LSN Replication role Status QoS Manager Version Node

---- ------------- ----------- ---------------- ------ --- --------------- ----

mycluster-1 29 MB 0/604DF70 Primary OK BestEffort 1.21.0 ip-192-168-2-231.ap-northeast-2.compute.internal

mycluster-2 29 MB 0/604DF70 Standby (sync) OK BestEffort 1.21.0 ip-192-168-3-29.ap-northeast-2.compute.internal

mycluster-3 29 MB 0/604DF70 Standby (sync) OK BestEffort 1.21.0 ip-192-168-1-211.ap-northeast-2.compute.internal

# 클러스터와 반드시 동일한 네임스페이스에 PgBouncer 파드 설치

cat <<EOT> pooler.yaml

apiVersion: postgresql.cnpg.io/v1

kind: Pooler

metadata:

name: pooler-rw

spec:

cluster:

name: mycluster

instances: 3

type: rw

pgbouncer:

poolMode: session

parameters:

max_client_conn: "1000"

default_pool_size: "10"

EOT

kubectl apply -f pooler.yaml && kubectl get pod -w

# 확인

kubectl get pooler

kubectl get svc,ep pooler-rw

# superuser 계정 암호

kubectl get secrets mycluster-superuser -o jsonpath={.data.password} | base64 -d ; echo

nhp8ymj6I7lSQcUk08FJtprwJzRR0ZojdCdx4sQbjjKW61JtrWRrMAioqI1xmzWz

# 접속 확인 : pooler 인증 설정이 적용됨!, 반복 접속 시 파드가 변경되는지? => # Primary 파드로 접속 확인

for ((i=1; i<=3; i++)); do PODNAME=myclient$i VERSION=15.3.0 envsubst < myclient.yaml | kubectl apply -f - ; done

kubectl exec -it myclient1 -- psql -U postgres -h pooler-rw -p 5432 -c "select inet_server_addr();"

pEbH4qk8p2ewe4YNXuBD1griIW7enBCaHCh4YOhwTevkABi9jvCBWfVKE5nRQCB5

inet_server_addr

------------------

10.233.202.213

(1 row)

kubectl get pod -o wide | grep 213

mycluster-1 1/1 Running 0 22m 10.233.202.213 platform-k8s-worker-3 <none> <none

'Kubernetes > Database Operator' 카테고리의 다른 글

| Kafka Operator(Strimzi) - DOIK2_5주차 (0) | 2023.11.19 |

|---|---|

| MongoDB Operator - Percona Operator for MongoDB(PSMDB) - DOIK 4주차 (4) | 2023.11.12 |

| 쿠버네티스 MySQL InnoDB Cluster - DOIK2_2주차 2 (2) | 2023.10.28 |

| 쿠버네티스 오퍼레이터란? (Kubernetes Operator) - DOIK_2주차 1 (2) | 2023.10.28 |

| 쿠버네티스 스테이트풀셋이란? (Kubernetes Statefulset) - DOIK2_1주차 (5) | 2023.10.21 |